Twenty Trillion Synapses Part Two: Moore’s Law Strikes Back

July 27, 2021

One day computers will match the powerful synaptic capacity and incredibly fast recall of the human brain. Having hardware with the equivalent of 20 trillion synapses instantly accessible would revolutionize society as we know it, powering a whole host of new applications and industries we cannot even imagine today.

In Part One of this series, we discussed how, to meet this vision, AI hardware needs the same rapid improvement that personal computers saw in the second half of the 20th century: a 100,000x increase in capacity (which we can think of as the weight count of AI algorithms) while maintaining instant accessibility (the ability to run complex AI algorithms and process information in microseconds to milliseconds). Part Two explains why what worked in the past, namely digital approaches and Moore’s Law scaling, will not get us there.

Digital Compute Cannot Keep Up

Memory presents an insurmountable barrier to building the trillions-of-synapses product vision using conventional technology and digital designs. The memory bandwidth, storage density, and power efficiency that this vision requires are far beyond what the slowing pace of improvement in conventional digital technology can deliver.

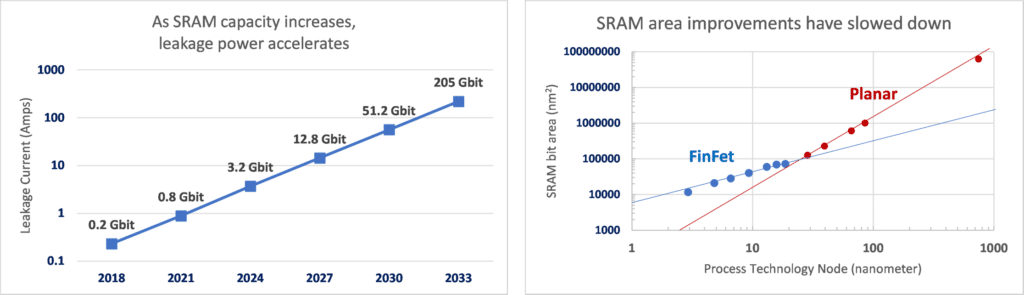

Starting with memory: the only mainstream digital memory that is power efficient during read operations and is fast enough to support instant computation of neural networks is SRAM. Unfortunately, the pace of improvement in the density of SRAM has slowed down significantly and will continue to decelerate. At the same time, the power leakage of SRAM cells has only been kept at bay, and tricks like lowering the supply voltage are running out. This means that when a stored neural network is not being used, it will still consume a substantial amount of power. In a trillions-of-synapses product built with digital technology, the product would be room-sized and the idle power draw would be astronomical due to the density and leakage power challenges.

Left: As the amount of memory that can fit on-chip goes up, the leakage power increases exponentially. Getting anywhere close to our trillions-of-synapses vision would require thousands of watts of power. Right: When transistor technology switched from planar to FinFET, the pace of improvement slowed down. Once FinFETs run out of steam at 1 to 3 nm, a further slowdown is very possible. Source: IEDM
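To make the scale of the memory problem concrete, here is a rough back-of-the-envelope sketch in Python. The 20 trillion synapse count comes from this series; the 8 bits per weight and the per-bit leakage figures are hypothetical values chosen only for illustration, not measured SRAM data.

```python
def weight_storage_bytes(num_weights: int, bits_per_weight: int) -> int:
    """Bytes needed to store every weight at the given precision."""
    return num_weights * bits_per_weight // 8


def idle_leakage_watts(num_weights: int, bits_per_weight: int,
                       leakage_watts_per_bit: float) -> float:
    """Idle power if every stored bit leaks a fixed amount of power."""
    return num_weights * bits_per_weight * leakage_watts_per_bit


SYNAPSES = 20 * 10**12      # 20 trillion weights, from the series title
BITS_PER_WEIGHT = 8         # assumption: one byte per weight

terabytes = weight_storage_bytes(SYNAPSES, BITS_PER_WEIGHT) / 1e12
print(f"Storage needed: {terabytes:.0f} TB")

# Hypothetical leakage per bit cell, in picowatts (illustrative only).
for leak_pw in (1.0, 10.0, 100.0):
    watts = idle_leakage_watts(SYNAPSES, BITS_PER_WEIGHT, leak_pw * 1e-12)
    print(f"{leak_pw:6.1f} pW/bit -> {watts:,.0f} W of idle leakage")
```

Because leakage in this simple model scales linearly with the number of stored bits, even a tiny per-bit figure adds up to a large idle power draw once the bit count reaches the hundreds of trillions.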

The other major challenge with the trillions-of-synapses vision is the scale of computation required: 100 to 1,000 trillion math operations per second in a low-power and cost-effective device, which requires a 100x to 1,000x improvement over current digital approaches. As with memory, the pace of improvement in compute energy and compute density has slowed rapidly in recent manufacturing process generations. This trend is going in completely the wrong direction to support the trillions-of-synapses vision.
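The link between throughput and power can be sketched with one line of arithmetic: power equals operations per second times energy per operation. The throughput range below is the one quoted above; the energy-per-operation values are hypothetical placeholders, not figures for any real chip.

```python
def required_power_watts(ops_per_second: float, joules_per_op: float) -> float:
    """Power draw = throughput x energy spent on each operation."""
    return ops_per_second * joules_per_op


# Throughput range from the text: 100 to 1,000 trillion ops per second.
THROUGHPUTS = (100e12, 1000e12)

# Hypothetical energy per operation in picojoules (illustrative only).
ENERGIES_PJ = (1.0, 0.1, 0.001)

for ops in THROUGHPUTS:
    for pj in ENERGIES_PJ:
        watts = required_power_watts(ops, pj * 1e-12)
        print(f"{ops / 1e12:5.0f} trillion ops/s at {pj:g} pJ/op -> {watts:g} W")
```

Under these assumptions, a device that spends 1 pJ per operation needs on the order of 100 W to sustain 100 trillion operations per second, which is why a low-power device needs energy per operation to fall by orders of magnitude.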

Digital Compute Costs are Spiraling Out of Control

The increasing cost of manufacturing is another hurdle. Process node improvements have been slowing down, fewer companies are adopting the latest technology nodes, and there are now only a few manufacturers who can follow the Moore’s Law trend, down from dozens in the 1990s.

There is a very good reason: the fixed capital costs of the equipment and facilities for state-of-the-art chip manufacturing are increasing exponentially. The fixed costs of chip design, especially mask set prices, are also skyrocketing, likely reaching above $100M in the 1 to 3 nm range. Companies must amortize these fixed costs over their products, making it harder and harder to achieve improvements in functionality per dollar in recent years.
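A minimal sketch of the amortization argument: each chip carries its own manufacturing cost plus an equal share of the fixed costs. The $100M fixed cost echoes the mask set estimate above; the per-chip manufacturing cost and the shipment volumes are hypothetical numbers picked for illustration.

```python
def cost_per_chip(fixed_cost: float, units: int, variable_cost: float) -> float:
    """Per-chip cost when fixed costs are spread evenly over all units sold."""
    if units <= 0:
        raise ValueError("units must be positive")
    return fixed_cost / units + variable_cost


FIXED_COST = 100e6      # dollars, the order of magnitude cited in the text
VARIABLE_COST = 20.0    # dollars per chip, hypothetical

# Hypothetical shipment volumes.
for units in (100_000, 1_000_000, 10_000_000):
    total = cost_per_chip(FIXED_COST, units, VARIABLE_COST)
    print(f"{units:>10,} units -> ${total:,.2f} per chip")
```

At low volumes the fixed-cost share dominates the price of each chip, so only products that ship in very large quantities can justify moving to the newest node.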

In response, some companies are now only pushing the most performance-critical sub-systems to the newest process nodes, while keeping the remainder on older, more economical nodes. This chiplet approach works well for processors and GPUs, but in the AI vision we outlined above, it does not. Everything on the chip (including the trillions of memory cells) would need to realize massive performance, cost, and power improvements. Due to the immense cost challenges, business as usual for the last 50 years with Moore’s Law scaling will not cut it. Something radical is needed.

Digital Compute is Overkill

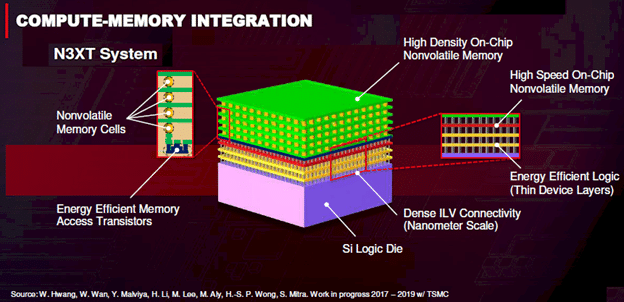

To address the conflicting challenges of memory bandwidth/latency and memory power for the trillions-of-synapses vision, some companies have proposed 3D integrated circuits with many layers of zero-leakage non-volatile memory on top of core compute modules. This technology is incredibly compelling for AI as it solves the memory density and leakage challenges and can enable trillions of synapses in a small device. Meshing this dense memory with digital compute approaches, however, results in major bottlenecks in performance and power. The technical reason for these bottlenecks is that memory like RRAM is resistive and does not actively drive the output lines like SRAM or have huge capacitors like DRAM. The solution is recognizing that digital compute is overkill.

Above: TSMC’s vision of the future of hardware is many layers of high-density non-volatile memory on top of logic. This technology aligns extremely well with Mythic’s vision of the future, and analog compute would give a 1000x boost in efficiency over digital compute.

The strength of digital compute is that it is precise, controlled, and predictable. For a processor running an operating system, executing compiled code, and communicating with other systems, this precision is critical. When reading trillions of weights out of a large stack of 3D non-volatile memory for instant computation of an AI algorithm, however, that precision would come at a huge cost in performance and power.

AI does not need this precision. Analog computing is a form of approximate computing – much like the brain – that can deliver far greater efficiency, which is needed to drive the continued improvement of AI. Analog compute operating in tandem with incredibly dense non-volatile memory is far faster, 1000x more energy efficient, and can pack 8 times more information into the memory.
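The idea that a neural network tolerates approximate arithmetic can be illustrated with a toy simulation. This is a sketch under stated assumptions, not a model of Mythic’s hardware: the 8-bit quantization, the Gaussian read noise, the noise level, and the function names `quantize` and `analog_dot` are all hypothetical choices made for illustration.

```python
import random


def quantize(w: float, bits: int = 8) -> float:
    """Snap a weight in [-1, 1] to one of 2**bits evenly spaced levels."""
    levels = 2 ** bits - 1
    clipped = max(-1.0, min(1.0, w))
    return round((clipped + 1.0) / 2.0 * levels) / levels * 2.0 - 1.0


def analog_dot(weights, inputs, noise_std, rng):
    """Dot product where each stored weight is read back with Gaussian noise."""
    total = 0.0
    for w, x in zip(weights, inputs):
        noisy_weight = quantize(w) + rng.gauss(0.0, noise_std)
        total += noisy_weight * x
    return total


rng = random.Random(0)          # fixed seed so the run is repeatable
n = 1024
weights = [rng.uniform(-1.0, 1.0) for _ in range(n)]
inputs = [rng.uniform(0.0, 1.0) for _ in range(n)]

exact = sum(w * x for w, x in zip(weights, inputs))
approx = analog_dot(weights, inputs, noise_std=0.01, rng=rng)

print(f"exact dot product:       {exact:.4f}")
print(f"approximate dot product: {approx:.4f}")
print(f"absolute error:          {abs(exact - approx):.4f}")
```

Because the per-weight errors are independent and roughly zero-mean, they tend to average out across the sum, which is the intuition behind trading exactness for efficiency in this kind of workload.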

What’s Next

In the upcoming Part Three of this blog series, we will highlight how the industry’s future vision of 3D memory and processor integration gels perfectly with Mythic’s pioneering analog compute technology, which will help deliver on the vision of trillions-of-synapses in the palm of your hand.
