Twenty Trillion Synapses Part 3: The Path Forward

September 30, 2021

What matters most to enable the intelligent future, where every device has a massive amount of AI capabilities?

In Part 1, we outlined the trillions-of-synapses vision: a cost-effective, low-power processor integrated into every device, with trillions of synapses instantly accessible and computable. This is a 100,000x step change in capabilities compared to today’s AI processors.

In Part 2, we detailed how Moore’s Law scaling of digital compute, which has driven the last 50 years of processor innovation, will not get us to this vision. New disruptive technologies are needed to realize it.

Memory Matters Most

So what matters most to enable the intelligent future where every device has a massive amount of AI capabilities?

The short answer is that memory is the most important thing. Before diving into this, it’s worth highlighting other factors that get a lot of attention in the AI hardware space, but are actually secondary in importance to the future of AI hardware.

– Speed and energy of arithmetic: Adding and multiplying numbers at high speed and low energy is not a significant challenge, even with digital logic. Disruptive hardware for AI must solve the memory bottleneck, which is far more critical, rather than just find clever ways of doing computation.

– Compute precision: 8 bits of compute precision is generally sufficient to ensure acceptable accuracy. Higher precisions can be achieved with low precision building blocks through error correction and partial products. Eliminating the memory bottleneck is immensely valuable, even if additional arithmetic work is needed to achieve the necessary precision.

– Flexible architecture: A flexible and generic architecture is necessary. It must be designed from first principles to ensure it is future-proof for the rapidly evolving world of neural networks. Without solving the memory bottleneck, however, great architectures will not go far.

– Quality software: A quality software framework is needed to enable developers to realize their AI visions. The focus must be on ease of use and compatibility, along with development velocity for moving from concept to running successfully in a production environment. Of course, software must run on hardware, so a rich hardware roadmap is still required to advance capabilities.
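To make the compute-precision point above concrete, here is a minimal sketch of how a higher-precision multiply can be assembled from lower-precision building blocks using partial products. The function names and the choice of 4-bit blocks are assumptions made for illustration and do not describe any particular hardware; error correction, which is also mentioned above, is not shown.

```python
def split_nibbles(x: int) -> tuple[int, int]:
    """Split an unsigned 8-bit value into (high, low) 4-bit halves."""
    if not 0 <= x <= 0xFF:
        raise ValueError("expected an unsigned 8-bit value")
    return x >> 4, x & 0xF


def mul4(a: int, b: int) -> int:
    """Hypothetical low-precision building block: 4-bit x 4-bit multiply only."""
    if not (0 <= a <= 0xF and 0 <= b <= 0xF):
        raise ValueError("mul4 only accepts 4-bit operands")
    return a * b


def mul8_from_partial_products(a: int, b: int) -> int:
    """Build an 8-bit x 8-bit product from four 4-bit partial products.

    With a = 16*a_hi + a_lo and b = 16*b_hi + b_lo:
    a*b = 256*a_hi*b_hi + 16*(a_hi*b_lo + a_lo*b_hi) + a_lo*b_lo
    """
    a_hi, a_lo = split_nibbles(a)
    b_hi, b_lo = split_nibbles(b)
    return (
        (mul4(a_hi, b_hi) << 8)
        + ((mul4(a_hi, b_lo) + mul4(a_lo, b_hi)) << 4)
        + mul4(a_lo, b_lo)
    )


# The recombined result matches a direct full-precision multiply.
print(mul8_from_partial_products(200, 123) == 200 * 123)
```

The cost is four narrow multiplies plus shifts and adds in place of one wide multiply, which is the "additional arithmetic work" trade-off described in the list above.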

Getting back to what matters most, it’s all about memory and can be boiled down to two factors: memory density and the dataflow from memory to computation.

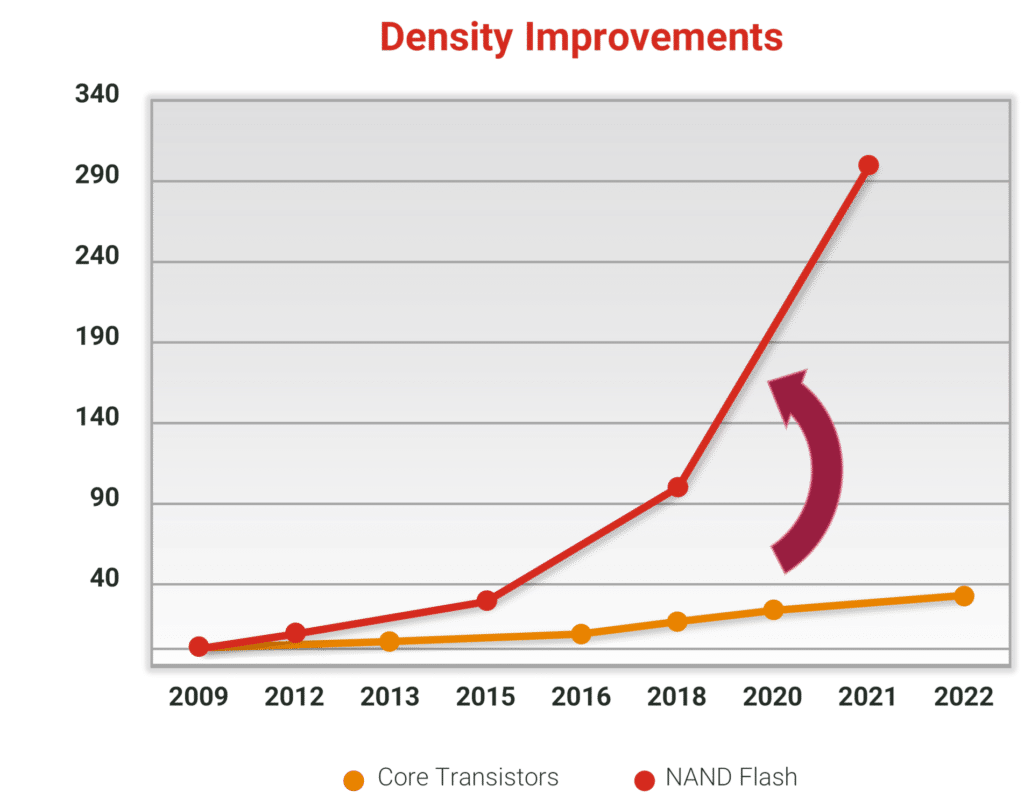

Memory Density: Non-volatile Memory to the Rescue

One of the most important aspects of AI hardware is how much memory can be packed into a processor per square millimeter, and how much power the memory draws. In Part 2, we showed that in the digital world, the mainstream, go-to memories – SRAM and DRAM – burn too much power, take up too much chip area, and are not improving fast enough to drive the necessary improvements. The solution is non-volatile memory (NVM), where even with today’s technology the densities achievable are astounding and zero-power retention solves the leakage power problem.

The most prevalent non-volatile memory today is flash memory, a mature technology that has revolutionized both server and embedded computing, with more than 100 exabytes of storage out in the world. The density of flash memory is incredible; the most advanced flash systems have 176 layers and can achieve up to 100 gigabytes (GB) per mm². 100 GB can fit on the tip of a pencil and two terabytes (TB) can fit on a pencil eraser. Flash is not normally considered alongside memories like DRAM and SRAM because of its speed, power consumption, read-disturb, and high voltages. As we’ll discuss in the next section, this is where analog compute comes in.

New non-volatile memories that have recently been commercialized, such as RRAM, also have strong potential. Memories like RRAM can be built very densely in three dimensions (e.g., 3D XPoint), store multi-level values for analog compute, and can be rewritten trillions of times. This opens the door for on-device learning, rapid application switching, and even analog neural network training.

From the perspective of storage, the trillions-of-synapses vision is achievable. The real challenge is retrieving data from these ultra-dense arrays and performing computation. This is where a compelling new technology – analog compute – comes in.
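As a rough back-of-the-envelope check on the storage claim, the sketch below turns the 100 GB per mm² density quoted earlier into synapses per mm² and into the area needed for a given synapse count. It assumes one byte (8 bits) per synapse and decimal gigabytes; both are assumptions made for illustration, as are the function names.

```python
BYTES_PER_GB = 10**9  # assumption: decimal gigabytes


def synapses_per_mm2(gb_per_mm2: float, bytes_per_synapse: float = 1.0) -> float:
    """Synapses that fit in one square millimeter at a given storage density."""
    return gb_per_mm2 * BYTES_PER_GB / bytes_per_synapse


def area_mm2(num_synapses: float, gb_per_mm2: float, bytes_per_synapse: float = 1.0) -> float:
    """Memory area needed to hold `num_synapses` weights."""
    return num_synapses / synapses_per_mm2(gb_per_mm2, bytes_per_synapse)


density = 100.0  # GB per mm^2, the figure quoted in the text

print(synapses_per_mm2(density))   # synapses per mm^2 at 1 byte each
print(area_mm2(1e12, density))     # mm^2 for one trillion synapses
print(area_mm2(20e12, density))    # mm^2 for twenty trillion synapses
```

Under these assumptions, one trillion synapses need about 10 mm² of memory and twenty trillion need about 200 mm², which is why storage alone is not the limiting factor.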

Memory to Computation Flow: Analog is the Answer

The memory density challenge of the trillions-of-synapses vision is solvable, but there is still the challenge of instantly computing neural networks at very low latency and power consumption. This comes down to accessing a huge number of values from memory and performing arithmetic on them tens to hundreds of trillions of times per second. In digital AI processors, the weights and input data are read out of memory and sent to arithmetic units. Even with conventional memory like SRAM, this compute path is a difficult performance and energy bottleneck. With ultra-dense non-volatile memory that is very slow and power hungry in digital modes, the performance bottlenecks would be insurmountable.
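To see why moving weights to arithmetic units becomes the bottleneck, the sketch below estimates the weight traffic implied by a given operation rate. It assumes one byte per weight and a `reuse` factor (how many multiply-accumulates each fetched weight feeds); these parameters and the function name are assumptions made for illustration, not measurements of any processor.

```python
def weight_bandwidth_tb_per_s(
    macs_per_second: float,
    bytes_per_weight: float = 1.0,
    reuse: float = 1.0,
) -> float:
    """Terabytes per second of weight traffic from memory to arithmetic units.

    `reuse` is the number of multiply-accumulates performed per fetched weight;
    reuse = 1 models the worst case where every operation needs a fresh weight.
    """
    if reuse <= 0:
        raise ValueError("reuse must be positive")
    return macs_per_second * bytes_per_weight / reuse / 1e12


# Tens to hundreds of trillions of operations per second, no weight reuse.
for rate in (10e12, 100e12):
    print(rate, weight_bandwidth_tb_per_s(rate))
```

With no reuse, 10 to 100 trillion operations per second implies 10 to 100 TB/s of weight reads. Caching and batching raise `reuse` and lower that number, but the traffic still has to cross the memory-to-compute path.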

At Mythic, we’ve pioneered technology to supercharge non-volatile memory and solve the memory bottleneck with analog compute-in-memory. With this approach, we can perform arithmetic inside non-volatile memory cells by manipulating and combining small electrical currents. These in-place math operations happen in parallel across entire memory banks at high speed and low power. For more details, please see our CTO’s blog post here.
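The idea of combining small currents can be illustrated with an idealized textbook model of an analog matrix-vector multiply: each cell contributes a current equal to its stored conductance times the applied voltage, and the currents on a shared column wire add up. The sketch below is a simplified simulation under those assumptions (normalized conductances, ideal DAC and ADC, no noise); it is not a description of Mythic’s actual circuits, and all names are hypothetical.

```python
def dac(code: int, bits: int = 8, v_max: float = 1.0) -> float:
    """Ideal DAC: map a digital input code to a voltage in [0, v_max]."""
    return v_max * code / (2**bits - 1)


def column_current(voltages, conductances) -> float:
    """Each cell passes I = G * V; currents on a shared column wire sum."""
    return sum(g * v for g, v in zip(conductances, voltages))


def adc(current: float, full_scale: float, bits: int = 8) -> int:
    """Ideal ADC: quantize a current in [0, full_scale] to a digital code."""
    levels = 2**bits - 1
    clipped = min(max(current, 0.0), full_scale)
    return round(levels * clipped / full_scale)


def analog_matvec(input_codes, weight_columns, bits: int = 8):
    """One output code per column; every column is evaluated 'in place'.

    `weight_columns` holds normalized conductances in [0, 1], one list per
    output. The weights never leave the array: only inputs and outputs move.
    """
    voltages = [dac(code, bits) for code in input_codes]
    outputs = []
    for column in weight_columns:
        full_scale = float(len(column))  # worst case: every G = 1 and V = 1
        outputs.append(adc(column_current(voltages, column), full_scale, bits))
    return outputs


weights = [[1.0, 1.0, 1.0], [0.5, 0.0, 0.25], [0.0, 0.0, 0.0]]
print(analog_matvec([255, 255, 255], weights))
```

In real hardware the columns are evaluated simultaneously rather than in a loop, and the ADC resolution and analog noise limit the achievable precision, which connects back to the compute-precision discussion earlier.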

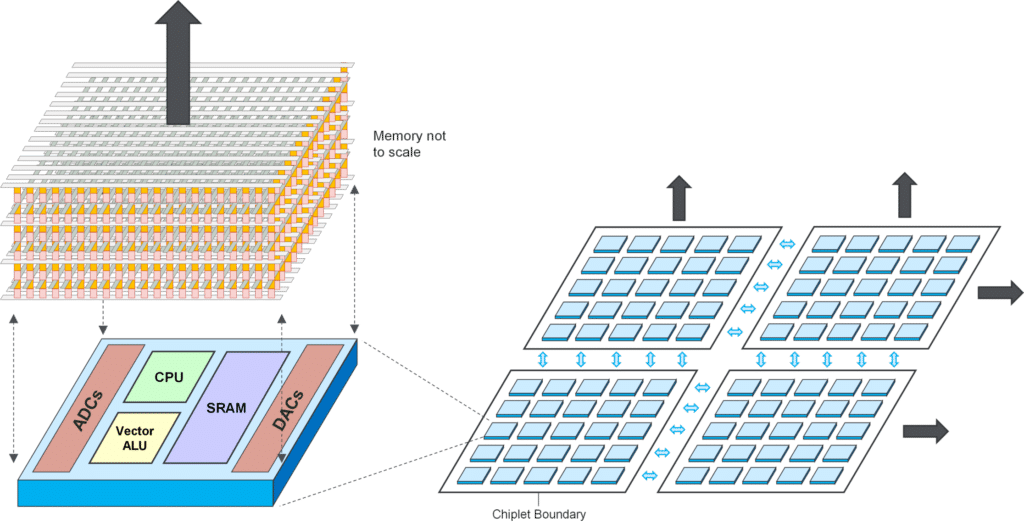

The figure above shows what a system would look like. Many layers of 3D non-volatile memory are stacked on top of the chip, with up to a hundred billion synapses per square millimeter. Below the memory are incredibly dense and low-power ADCs and DACs, as well as conventional digital processors and memory. A chiplet strategy can be used to build a flexible, scalable, and easily integrated product for both edge and datacenter, while keeping yield high.

The roadmap to get here starts with Mythic’s existing products based on embedded NOR flash, where analog compute has demonstrated 10X advantages in cost and power. To realize the trillions-of-synapses vision, further development is needed to enable analog compute in NAND flash and RRAM, and to integrate 3D memory technology with advanced chip processes. AI training hardware and infrastructure also must make big leaps to enable faster development cycles and better use of the massive amount of data out in the world. Nevertheless, the base technology of ultra-dense non-volatile memory and analog compute is proven. The future of AI hardware is very bright, and Mythic is poised to drive an incredible future of intelligent devices.

In Conclusion

At Mythic, we’re on the journey to trillions of synapses to enable unprecedented amounts of intelligence in every device. We have pioneered the only path to this vision: analog compute in non-volatile memory. We’ve released our flagship AI accelerator, the M1076, and are already hard at work developing the future in analog compute. Stay tuned as we announce many exciting new products and technology breakthroughs to come!
