The Future of AI: Twenty Trillion Synapses

June 29, 2021

This is the first of a three-part series on the future of AI with analog compute, and it is part of a longer series of blog posts on why Mythic pioneered analog compute. Unlike past articles, which focused on our current products, this series looks many years ahead. If you want to read the other articles in the series, please check out our blog page.

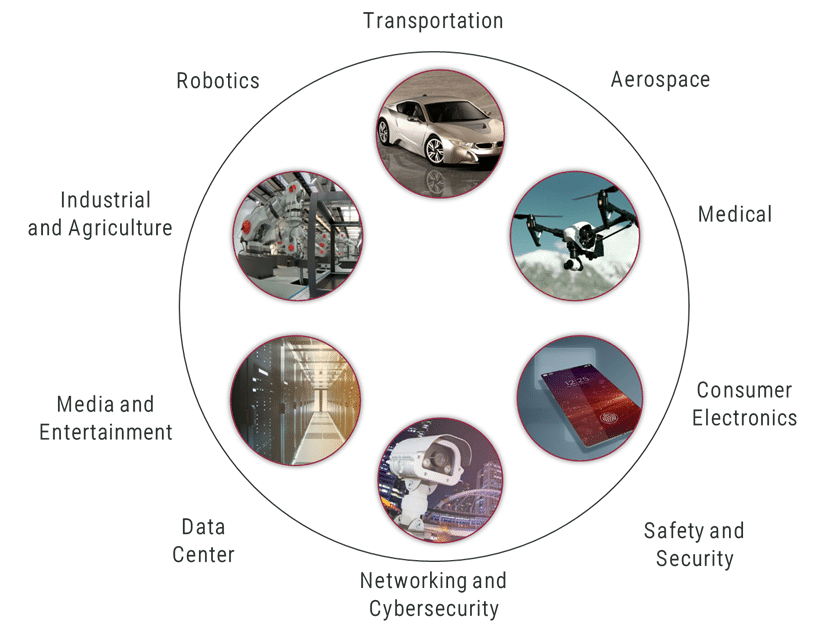

When questions about the future impact of AI come up, the best answer is that every piece of technology in our lives will improve. Technology has long driven societal improvement: electricity, the automobile, the PC revolution that massively improved productivity, and the internet and mobile revolutions that brought the world closer together and made a massive amount of information available instantly. AI will bring improvements described by a whole new set of adjectives: intuitive, accurate, healthier, safer, engaging, informative, efficient, and so on. Neural network inference has an incredibly broad reach across the products and systems of everyday life around the world. The table below only scratches the surface of what is possible and of what will undergo radical transformation and improvement.

A major limiting factor of AI today is hardware. As we have seen before in the industry, as hardware becomes more capable, software capabilities grow alongside it. Realizing the intelligent future will require an improvement in AI hardware on the order of 100,000X, spanning performance, power, and cost. We know this by looking at the past. The early days of computers were focused on running a single application at a time. The early days of the internet were about sharing data and email among researchers. Many envisioned the future that we know now, but the hardware was not there yet. Likewise with AI, we currently focus on single use cases that bog down modern hardware, yet the tantalizing future potential is apparent: demand is increasing for personalized AI models, for many AI models running in parallel, and for the ability to deploy incredibly powerful new models that are rapidly growing to trillions of parameters.

What Matters for AI Hardware

When thinking about AI hardware in the decades to come, there are two critical concepts worth focusing on: capacity and instant accessibility. To understand these, we can look at our own personal intelligence hardware: the brain.

The first concept is capacity. Humans are at the top as far as intelligence goes, and the main reason is the capacity of our brains, which, biologically speaking, is the number of synapses and neurons they contain. The equivalent concept in AI hardware is the number of neural network weights (also called parameters or coefficients). Currently, weight counts of AI algorithms range from 5M to 100M for computer vision tasks like image recognition and from 500M to 100B for speech and language understanding. In the future, these numbers will likely be much larger.

The second concept is instant accessibility. Our brain does not store its knowledge in separate external memory or storage. There is no concept of loading a program from storage and running it. Instead, all the stored information in the brain is instantly accessible and locally computable in microseconds to milliseconds.

Taking these two concepts of capacity and instant accessibility, let's now paint a picture of what a 100,000X improvement in AI hardware will look like.

Far Edge

Picture a single chip that can store one trillion synapses, whose stored algorithms are instantly accessible and run in milliseconds. It draws less than one watt when active and can quickly enter milliwatt (or even microwatt) sleep states, meaning long battery life for small autonomous systems, mobile devices, and wearables. It runs AI algorithms far more capable than those we know today, enabling deep understanding of the scene and environment, rich interaction with the user, immersive displays for gaming, entertainment, and user interfaces, and powerful productivity features for the enterprise, medical, and security markets.

Edge Hub

Imagine 20 trillion synapses, instantly accessible, in the form factor and power envelope of a tablet computer. This would be a personalized, local, and private intelligence hub with 100,000X more AI capability than today's smart home products. Five of these combined would match the synaptic capacity and blazingly fast recall of the human brain (it is by no means an apples-to-apples comparison, but it is still exciting to think about the scale of what may be possible).

While it is difficult to imagine what such capabilities will bring, the leap could be as large as the one between computing in 1960 and computing in 2020, and the future will certainly be very exciting. What will propel the semiconductor industry to new heights in the coming years and decades is delivering continuous improvements in AI compute hardware and enabling visionaries to build exciting applications and experiences on top of it.

Throwing Down the Gauntlet

What we have outlined is a 100,000X improvement over current technology, an industry-defining engineering challenge with enormous implications for society. The good news is that we have seen this before. Improvements of 100,000X have been realized in many areas of the compute industry over the last fifty years, including compute power, storage capacity, power efficiency, data transmission speed, and cost. The bad news is that these improvements relied on Moore's Law scaling, and in recent years they have slowed down.

In the next blog post, we will dive deeper into Moore's Law. We have relied on it extensively for the technology boom of the last 50 years, but where will it take us going forward for AI? Will it get us to our trillions-of-synapses vision? Spoiler alert: the answer is no, and our third blog post will discuss how analog compute will get us there.