An Evolution in Power Management with Mythic’s Analog Matrix Processor

April 28, 2021

Managing power consumption is one of the toughest challenges for designers of AI-enabled products and systems. Fans, coolers, large enclosures, power delivery, and big batteries can quickly drive up the size and cost of products and push desired features out of the range of feasibility. Mythic Analog Matrix Processors (Mythic AMP™) are designed to solve this challenge, delivering significant power advantages under every operating condition: at peak load, at idle, and everywhere in between. This allows companies to design cost-effective products that leverage the disruptive power of state-of-the-art AI.

Today’s digital compute approaches focus on optimizing for peak benchmarks and have delivered only incremental advances in chip architecture. Analog compute offers a much better alternative for high-end edge AI requirements. It crushes the memory bottleneck in a fundamental way, while also creating second-order effects that lead to major advantages in system-level power, performance, and cost.

This post focuses on managing power consumption, starting with the large drop in computation energy that comes from computing inside flash memory arrays with tiny currents. We then look at the second-order effects analog compute has on power, which allow companies to create unique and compelling products anywhere from the far edge to the heart of a hyperscale data center.

It starts with the multiply-accumulate

At the lowest level, the power advantages of analog compute come from performing a massively parallel vector-matrix multiplication with the matrix stored inside flash memory arrays. Tiny electrical currents are steered through a flash memory array that stores reprogrammable neural network weights, and the result is captured through analog-to-digital converters (ADCs). By carefully setting numerical ranges and using analog compute for inference at 8-bit precision and below, the analog-to-digital and digital-to-analog energy overhead can be kept to a small portion of the overall power budget, and a large drop in compute power can be achieved. There are also many second-order, system-level effects that deliver a large drop in power. For example, when the amount of data movement on the chip is multiple orders of magnitude lower, the system clock speed can be kept up to 10x lower than in competing systems and the design of the control processor is much simpler.
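To make the arithmetic concrete, here is a minimal digital sketch of an 8-bit vector-matrix multiplication. It is not a model of Mythic's hardware: the function names, the symmetric per-tensor scaling, and the sample values are all assumptions chosen for illustration. The integer accumulation in each column simply stands in for the summation that the analog array performs before the ADCs read out a result.

```python
def quantize(values, bits=8):
    """Map floats to signed integers with one symmetric scale (an assumed scheme)."""
    qmax = 2 ** (bits - 1) - 1                    # 127 for 8-bit values
    peak = max(abs(v) for v in values) or 1.0     # avoid a zero scale for all-zero input
    scale = peak / qmax
    return [round(v / scale) for v in values], scale


def vector_matrix_multiply(x, weights, bits=8):
    """Approximate x @ weights using quantized inputs and weights.

    `weights` is a list of rows with len(x) rows and one column per output.
    """
    n_out = len(weights[0])
    flat, w_scale = quantize([w for row in weights for w in row], bits)
    q_w = [flat[i * n_out:(i + 1) * n_out] for i in range(len(weights))]
    q_x, x_scale = quantize(x, bits)

    # Every output column accumulates independently, so all columns could be
    # evaluated in parallel; this loop is a sequential stand-in for that.
    acc = [sum(q_x[i] * q_w[i][j] for i in range(len(x))) for j in range(n_out)]

    # Rescale the integer accumulations back to real-valued outputs.
    return [a * x_scale * w_scale for a in acc]


if __name__ == "__main__":
    x = [0.5, -1.0, 0.25]
    w = [[1.0, -0.5], [0.25, 0.75], [-1.0, 0.5]]
    print(vector_matrix_multiply(x, w))
```

The outputs land close to the full-precision products, which is the point of choosing the numerical ranges carefully: the quantization error stays small relative to the signal.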

From the lowest-level perspective of performing all of the math and operations for high-performance AI compute, Mythic’s analog compute systems have the best power efficiency both in the short run and long run. Analog compute is a greenfield technology with great potential, and with Mythic’s roadmap of technology improvements it will sustain AI-enabled product roadmaps for years to come.

Data movement in and out of memory

The size of neural network models creates significant challenges around power consumption. Parameter (or weight) counts of up to 100M for vision models and more than 1B for language and knowledge-graph models are now the norm, and it is increasingly common to run many models concurrently. Efforts to prune and compress models simply cannot keep up with the demand.
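A quick back-of-the-envelope calculation shows what those parameter counts mean for storage. The helper below is a hypothetical illustration that counts only raw weight bits and ignores any metadata or padding; the 8-bit and 32-bit widths are assumed example precisions.

```python
def weight_storage_bytes(num_params, bits_per_weight=8):
    """Raw bytes needed to hold the weights, rounded up to a whole byte."""
    return (num_params * bits_per_weight + 7) // 8


if __name__ == "__main__":
    for label, params in [("100M-parameter model", 100_000_000),
                          ("1B-parameter model", 1_000_000_000)]:
        for bits in (8, 32):
            megabytes = weight_storage_bytes(params, bits) / 1e6
            print(f"{label} at {bits}-bit: {megabytes:,.0f} MB")
```

At 8 bits per weight, 100M parameters occupy 100 MB and 1B parameters occupy 1 GB, and several such models running concurrently multiply that footprint.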

To remain cost-effective, digital systems must store the neural networks in DRAM, which consumes significant power both during active use and during idle periods. System architects spend significant effort optimizing DRAM bandwidth and maximizing overall processor utilization, yet even the best approaches do not change the fact that DRAM is an expensive, inconvenient, and power-hungry way to store neural network weights.

Analog compute in flash technology enables the Mythic AMP to eliminate DRAM chips for AI and have incredibly dense weight storage inside a single-chip accelerator. In addition to the cost and complexity benefits, the power consumption is significantly lower and easier to manage.

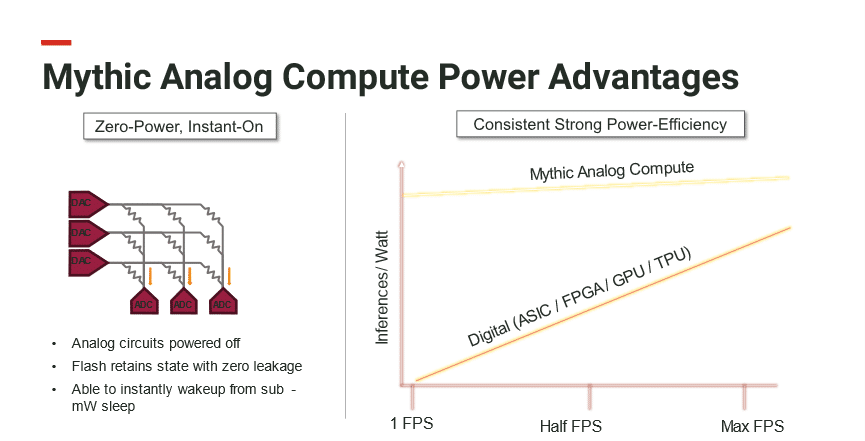

Power efficiency across all operating scenarios

Given that hardware rarely, if ever, runs at peak utilization all the time, power efficiency at different utilization levels (e.g., half, low, or none) matters greatly for product designers and system architects. For battery-powered edge devices that are event driven (e.g., a car drives past a camera) or used infrequently (e.g., AR glasses kept in someone’s pocket), being able to transition instantly from an ultra-low-power sleep state to GPU-level performance has a huge impact on product capabilities. The same applies in data centers, where utilization is never 100% and idle hardware contributes to large capital and operating costs.

A unique aspect of Mythic’s analog compute technology is the ability to compute inside non-volatile flash memory. When the power to the memory and analog components is turned off, power consumption drops to nearly zero, yet the state of the memory is retained. The power can then be restored, and compute can resume instantly. It would be difficult, if not impossible, to implement the same level of power control in high-performance digital systems, because the memories that digital processors use to store the neural network (DRAM and SRAM) consume significant power to maintain their state even when idle. This extremely scalable power efficiency will let product designers unlock incredibly powerful new features in light, portable electronics, and will eliminate a significant amount of the energy wasted on power and cooling in AI data centers.
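The effect of idle power on an event-driven device can be sketched with a simple duty-cycle average. Every number below is an illustrative placeholder, not a measurement of Mythic AMP or of any other chip, and the function name is hypothetical.

```python
def average_power_mw(active_mw, idle_mw, duty_cycle):
    """Time-weighted average power for a device active `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * idle_mw


if __name__ == "__main__":
    duty = 0.05           # assumed: busy 5% of the time
    active = 1000.0       # assumed active power in mW, same for both cases

    # Assumed case 1: memory must stay powered while idle to keep its state.
    retained = average_power_mw(active, idle_mw=100.0, duty_cycle=duty)
    # Assumed case 2: non-volatile storage, so idle power is gated to zero.
    gated = average_power_mw(active, idle_mw=0.0, duty_cycle=duty)

    print(f"idle memory powered: {retained:.1f} mW average")
    print(f"idle power gated:    {gated:.1f} mW average")
```

With a low duty cycle, the idle term dominates the average, which is why driving idle power toward zero matters so much for battery-powered products.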

To learn more about Mythic AMP™ and our analog compute technology, please visit: https://mythic.ai/technology/.
